Disclaimer: Preview version; content and features subject to change.

This tutorial is geared toward researchers and developers who want to become familiar with VHToolkit 2.0.

The VHToolkit 2.0 standalone application features a set of example scenes that display key capabilities of the project.

Binaries for the VHToolkit 2.0 standalone application are available for the following platforms:

Support for Android, iOS, and WebGL will be explored in future updates.

Disclaimer: Some capabilities and features may not be available on all platforms. All use is governed by the License Agreement.

Refer to the Unity documentation on Player applications.

Acquiring the VHToolkit 2.0 binary executable is a simple process:

Instructions for launch and navigation of the VHToolkit 2.0 binary (standalone) application.

A simple LevelSelect scene serves as a hub to launch the example scenes for the VHToolkit 2.0 project.

ExampleNLP: see the section below for basic use of this scene. To customize the scene, see the Add New NLP Service tutorial.

Visit the VH Sandbox Example and Create and Hook Up New Chatbot tutorial pages for scene-specific details and instructions.

When in a particular scene, the following controls are commonly available:

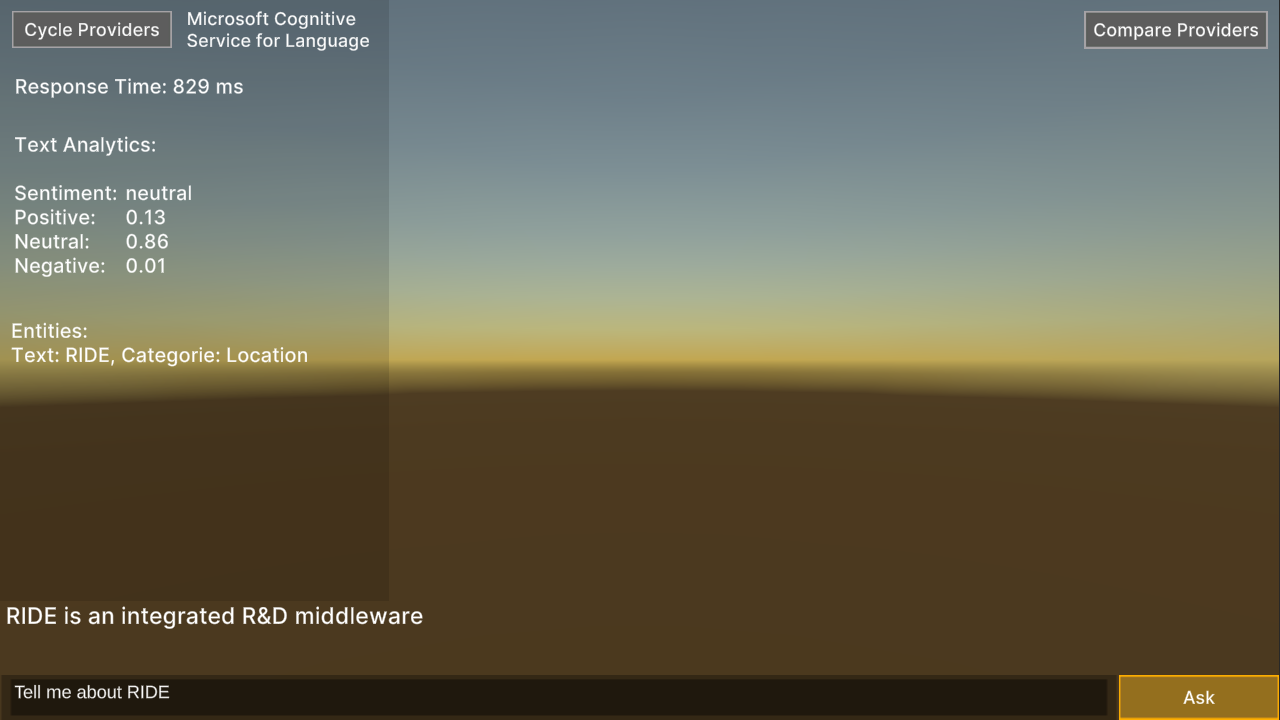

Certain scenes utilize a debug menu that appears in the upper-left corner by default.

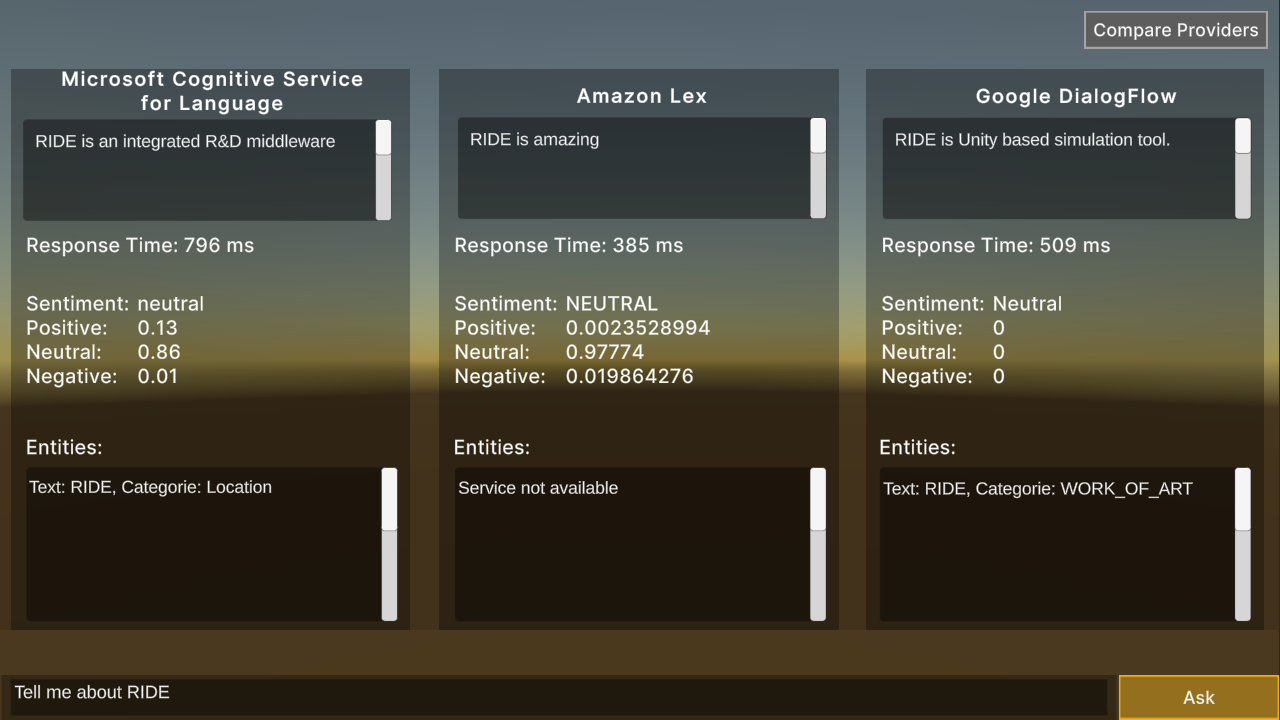

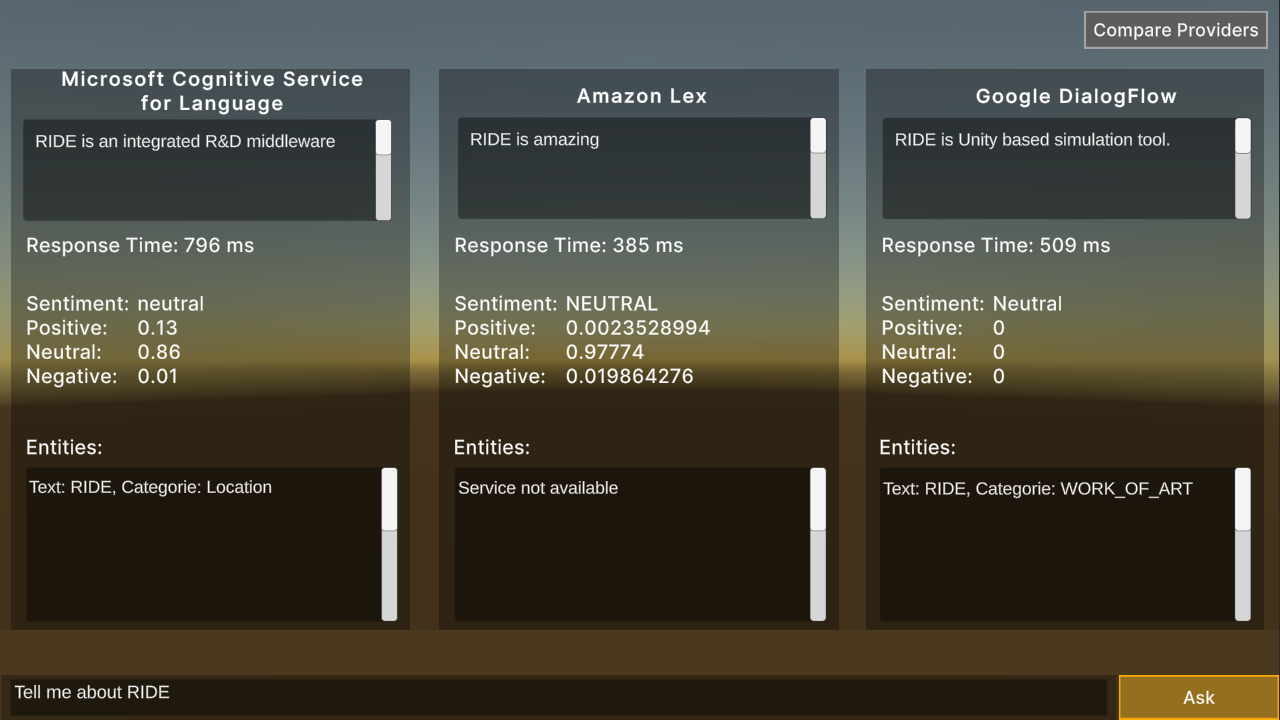

Shows how to leverage commodity NLP AI services through REST calls, using RIDE’s common web services system.

Leverages RIDE’s generic NLP C# interface, which focuses primarily on question-answering interactions, although the same principle applies generally to user input and agent output. Optionally includes sentiment analysis and entity analysis.


IMPORTANT: If you wish to use the ExampleNLP scene and its related web services, you must update your local RIDE config file (ride.json). Back up any local changes to the file, including your terrain key. Next, restore your config to its defaults using the corresponding option in the Config debug menu at run time, then reapply any customizations and your terrain key. Refer to the Config File page under Support for more information.

Test different types of input and different response lengths, and measure metrics such as the average response time for both “hot” and “cold” services.

A service is considered “cold” when the service requesting the NLP agent makes its very first request right after initialization and deployment, and the agent has not been used for a while (here, all of the respective platform agents were left idle for at least 5 minutes).

Once the requesting service has made that first request after initialization and deployment, every subsequent request is considered “hot”.
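To make the hot/cold comparison concrete, here is a minimal C# sketch of one way to record response times and average them separately. `ColdHotLatencyTracker` is a hypothetical helper, not part of RIDE, and it simplifies the definition above: a request counts as cold if it is the first one the tracker sees or if at least `idleThreshold` (for example, 5 minutes) has passed since the previous request started; every other request counts as hot. It uses only standard .NET types (`Stopwatch`, LINQ `Average`), not Unity or RIDE APIs.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;

// Hypothetical helper, not part of RIDE: averages response times separately
// for "cold" and "hot" requests.
public sealed class ColdHotLatencyTracker
{
    private readonly TimeSpan _idleThreshold;
    private readonly List<double> _coldMs = new List<double>();
    private readonly List<double> _hotMs = new List<double>();
    private DateTime? _lastRequestUtc;

    public ColdHotLatencyTracker(TimeSpan idleThreshold) => _idleThreshold = idleThreshold;

    public int ColdCount => _coldMs.Count;
    public int HotCount => _hotMs.Count;
    public double AverageColdMs => _coldMs.Count == 0 ? double.NaN : _coldMs.Average();
    public double AverageHotMs => _hotMs.Count == 0 ? double.NaN : _hotMs.Average();

    // Records one request. It is cold if it is the first request seen, or if the
    // gap since the previous request's start is at least the idle threshold.
    public void Record(DateTime startUtc, double elapsedMs)
    {
        bool isCold = _lastRequestUtc == null || startUtc - _lastRequestUtc.Value >= _idleThreshold;
        (isCold ? _coldMs : _hotMs).Add(elapsedMs);
        _lastRequestUtc = startUtc;
    }

    // Times a synchronous request with a Stopwatch and records the result.
    public T Measure<T>(Func<T> request)
    {
        DateTime startUtc = DateTime.UtcNow;
        Stopwatch stopwatch = Stopwatch.StartNew();
        T result = request();
        stopwatch.Stop();
        Record(startUtc, stopwatch.Elapsed.TotalMilliseconds);
        return result;
    }
}
```

For example, call `Record` with each request's start time and elapsed milliseconds, then compare `AverageColdMs` with `AverageHotMs`. Because RIDE's NLP requests complete through a callback, a real measurement would stop the timer inside the callback rather than around a synchronous call as `Measure` does.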

Note that most services are domain-specific, with just a handful of questions and answers authored purely for demonstration purposes (example question: “What is RIDE?”). The exception is OpenAI GPT-3, which is a general-purpose language model.

Ask each service the same question and compare how the outputs differ.

Assets/Ride (local)/Examples/NLP/ExampleNLP.unity

The ExampleNLP scene uses a customizable UI and interchangeable service providers, implemented through a canvas, scripts, and prefabs. Explore the scene’s objects in the Hierarchy view of the Unity Editor, and browse the Ride.NLP source via the API documentation.

The main script is ExampleNLP.cs in the same folder.

Each NLP option implements the INLPQnASystem C# interface, which itself is based on INLPSystem. These systems provide the main building blocks for interfacing with an NLP service:

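```csharp
// Field holding the OpenAI GPT-3 NLP system used by ExampleNLP.
OpenAIGPT3System m_openAIGPT3;

// Create a GameObject, attach the OpenAIGPT3System component, and keep a reference to it.
var openAIGPT3Component = new GameObject("OpenAIGPT3System").AddComponent<OpenAIGPT3System>();
m_openAIGPT3 = openAIGPT3Component;
```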

The specific endpoint and authentication key are defined through RIDE’s configuration system:

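```csharp
// Look up RIDE's configuration system through the global system-access API.
m_configSystems = Globals.api.systemAccessSystem.GetSystem<RideConfigSystem>();

// Point the component at the configured endpoint and authorization key.
openAIGPT3Component.m_uri = m_configSystems.config.openAI.endpoint;
openAIGPT3Component.m_authorizationKey = m_configSystems.config.openAI.endpointKey;
```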
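The lines above read the endpoint and key from RIDE’s config rather than hard-coding them in the scene. The sketch below illustrates the same idea in plain .NET using `System.Text.Json`; it is not how RideConfigSystem is implemented. The JSON layout (`openAI.endpoint`, `openAI.endpointKey`) is an assumption inferred from the accessor names above and may not match the actual ride.json, and `NlpConfigSketch`, `OpenAISettings`, and `FakeOpenAIComponent` are hypothetical types.

```csharp
using System;
using System.Text.Json;

// Hypothetical settings shape; the real ride.json layout may differ.
public sealed class OpenAISettings
{
    public string Endpoint { get; set; } = "";
    public string EndpointKey { get; set; } = "";
}

// Hypothetical root object exposing OpenAI.Endpoint / OpenAI.EndpointKey.
public sealed class NlpConfigSketch
{
    public OpenAISettings OpenAI { get; set; } = new OpenAISettings();

    // Parses JSON such as {"openAI":{"endpoint":"...","endpointKey":"..."}}.
    // Case-insensitive matching maps "openAI" to OpenAI, "endpoint" to Endpoint, etc.
    public static NlpConfigSketch Parse(string json)
    {
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        return JsonSerializer.Deserialize<NlpConfigSketch>(json, options)
            ?? throw new InvalidOperationException("Config JSON deserialized to null.");
    }
}

// Hypothetical stand-in for the NLP component fields assigned in the scene script.
public sealed class FakeOpenAIComponent
{
    public string m_uri = "";
    public string m_authorizationKey = "";

    // Copies the endpoint and key from config instead of hard-coding them.
    public void ApplyConfig(NlpConfigSketch config)
    {
        m_uri = config.OpenAI.Endpoint;
        m_authorizationKey = config.OpenAI.EndpointKey;
    }
}
```

With this arrangement, pointing the sketch at a different endpoint or key only requires editing the JSON, not the code.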

The NLP system wraps the user question in an NLPQnAQuestion or NLPRequest object and sends it through RIDE’s common web services system. The result is wrapped in an NLPQnAAnswer or NLPResponse object. A callback function lets the client (in this case, ExampleNLP) decide what to do with the result. Because the NLP system handles most of the work, the client needs only one line to send the user question:

```csharp
// Send the user's question; OnCompleteAnswer is invoked with the result.
m_openAIGPT3.AskQuestion(userInput, OnCompleteAnswer);
```
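To illustrate the request/callback flow described above, here is a self-contained C# sketch with hypothetical stand-ins for the RIDE types. `QnAQuestionSketch`, `QnAAnswerSketch`, `IQnASystemSketch`, and `MockQnASystem` are illustrative names, not RIDE’s API, and their members are assumptions. The mock answers from a small authored table instead of calling a web service, and it invokes the callback synchronously, whereas a real RIDE NLP system completes the request through the common web services system.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified stand-ins; RIDE's real NLPQnAQuestion, NLPQnAAnswer,
// and INLPQnASystem types have their own members (see the API documentation).
public sealed class QnAQuestionSketch
{
    public string Text { get; }
    public QnAQuestionSketch(string text) => Text = text;
}

public sealed class QnAAnswerSketch
{
    public string Question { get; }
    public string Answer { get; }
    public QnAAnswerSketch(string question, string answer) { Question = question; Answer = answer; }
}

public interface IQnASystemSketch
{
    // Wraps the raw input, "sends" it, and invokes the callback with the wrapped result.
    void AskQuestion(string userInput, Action<QnAAnswerSketch> onComplete);
}

// Offline mock that answers from a small authored table, similar in spirit to
// the domain-specific demo services. No web request is made.
public sealed class MockQnASystem : IQnASystemSketch
{
    private readonly Dictionary<string, string> _answers;

    public MockQnASystem(Dictionary<string, string> answers) => _answers = answers;

    public void AskQuestion(string userInput, Action<QnAAnswerSketch> onComplete)
    {
        var question = new QnAQuestionSketch(userInput);
        string answer = _answers.TryGetValue(question.Text, out var found)
            ? found
            : "Sorry, I don't know that one.";
        onComplete(new QnAAnswerSketch(question.Text, answer));
    }
}
```

Because the calling code depends only on the interface, a client can call `nlp.AskQuestion(userInput, OnCompleteAnswer)` against any implementation, which mirrors how a shared interface lets the scene swap service providers.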