Each example scene demonstrates a set of related RIDE API capabilities.

Explore a scene to learn more about the API.

Instantiate Red and Blue agents from script at runtime.

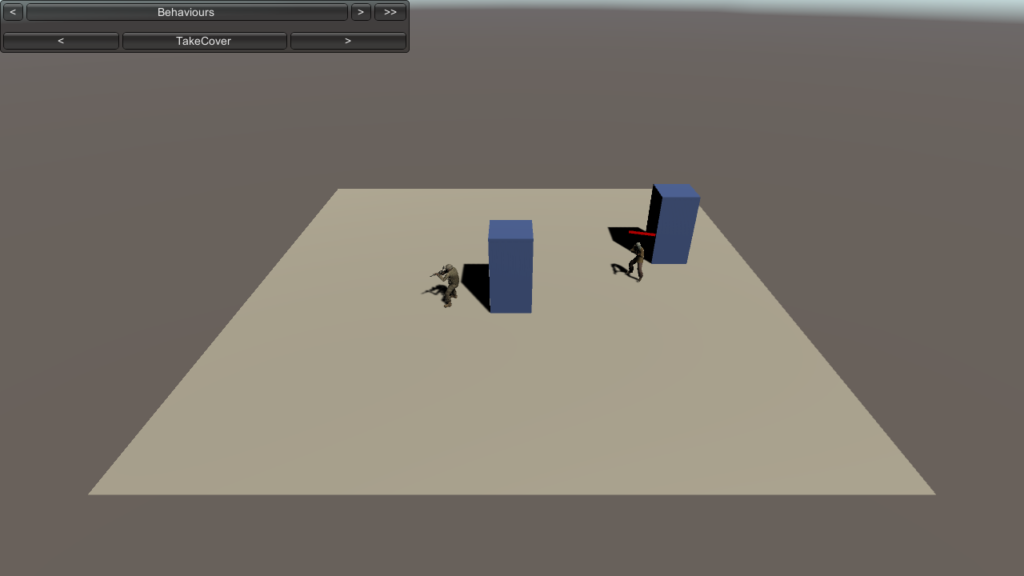

Demonstrates a code-driven set of general and custom agent behaviors for a unit.

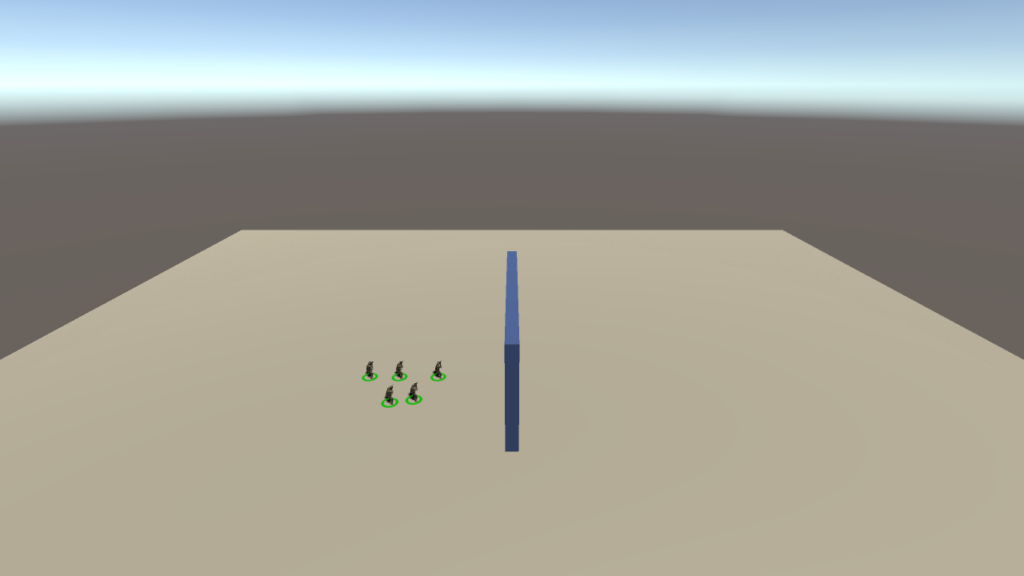

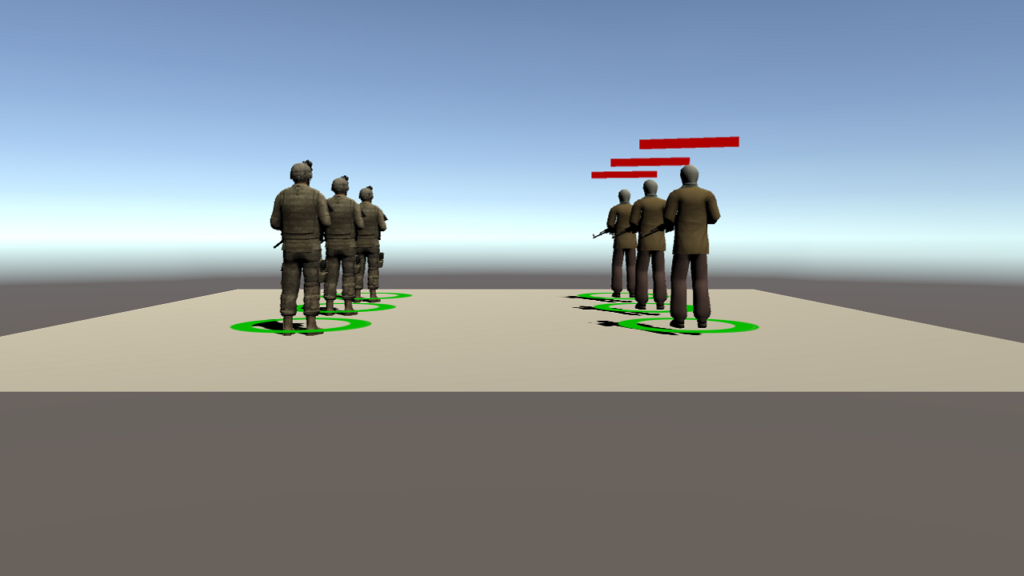

Command agents with basic real-time strategy (RTS)-style input. A selection marquee is drawn in a specified color, and each selected agent displays a circular icon at its base.

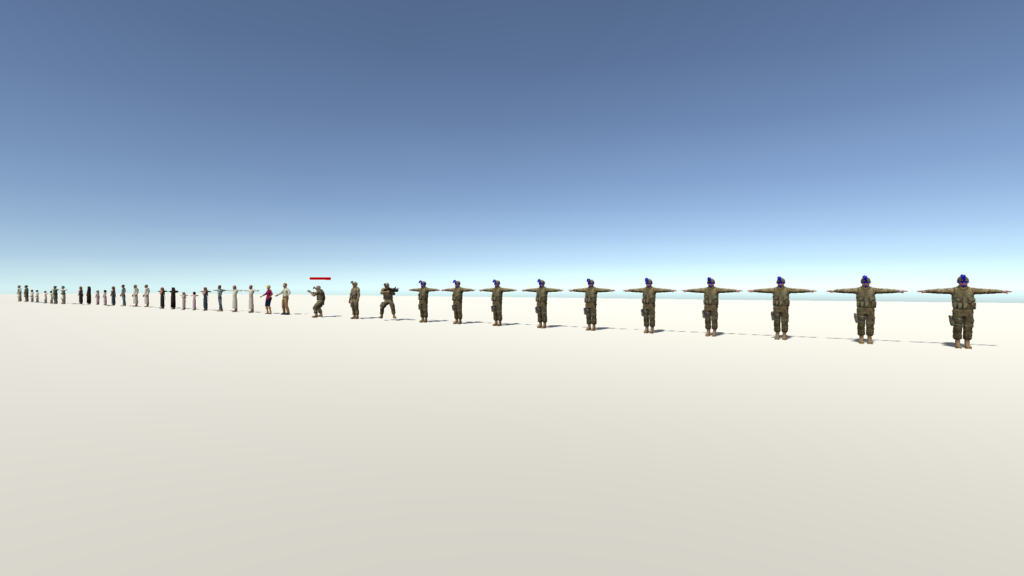

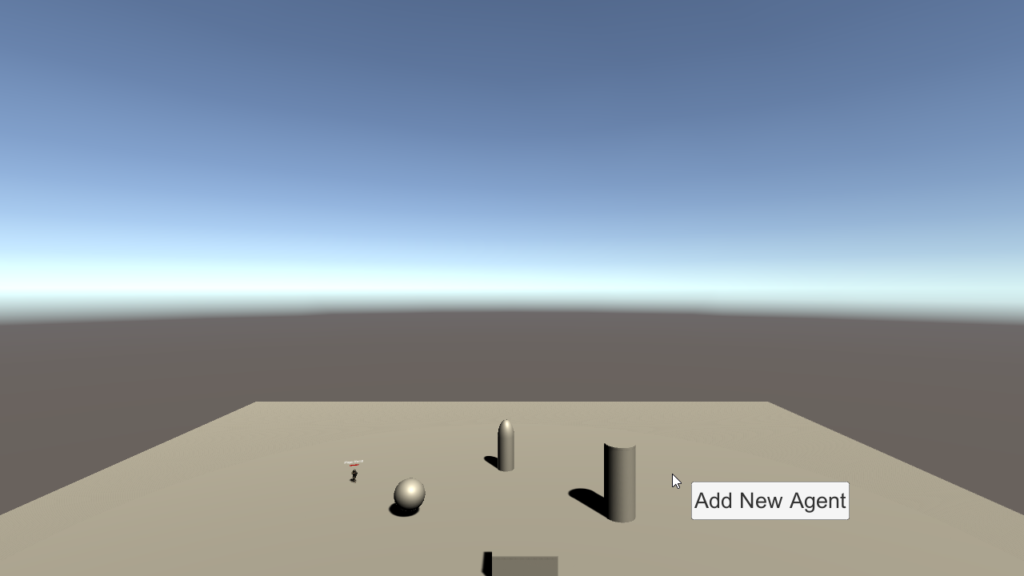
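The marquee selection described above comes down to normalizing the drag rectangle and testing which agents' screen positions fall inside it. The following is a minimal, engine-agnostic sketch of that idea; `Agent`, `marquee_rect`, and `select_in_marquee` are hypothetical names, not part of the RIDE API, and agent screen coordinates are assumed to be already projected from world space.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical agent with a precomputed screen-space position."""
    name: str
    screen_x: float
    screen_y: float
    selected: bool = False


def marquee_rect(start, end):
    """Normalize a drag from start to end into (min_x, min_y, max_x, max_y),
    so dragging in any direction yields the same rectangle."""
    (x0, y0), (x1, y1) = start, end
    return min(x0, x1), min(y0, y1), max(x0, x1), max(y0, y1)


def select_in_marquee(agents, start, end):
    """Flag agents whose screen position lies inside the marquee and return them."""
    min_x, min_y, max_x, max_y = marquee_rect(start, end)
    for agent in agents:
        agent.selected = (min_x <= agent.screen_x <= max_x
                          and min_y <= agent.screen_y <= max_y)
    return [a for a in agents if a.selected]
```

For example, dragging from `(60, 0)` to `(0, 60)` over agents at `(10, 10)`, `(50, 50)`, and `(200, 5)` selects only the first two; the `selected` flag is what a renderer would check before drawing the circular icon.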

Collects the available entity prefabs for inspection, modification, and testing.

Demonstrates various ICT S&T research lines in a well-known format with specific pass/fail parameters and training objectives.

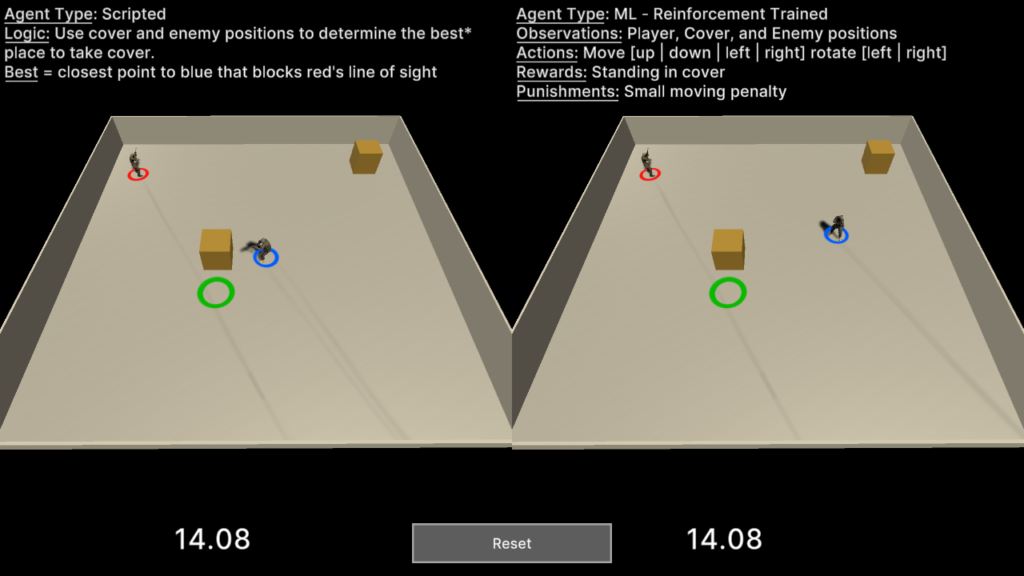

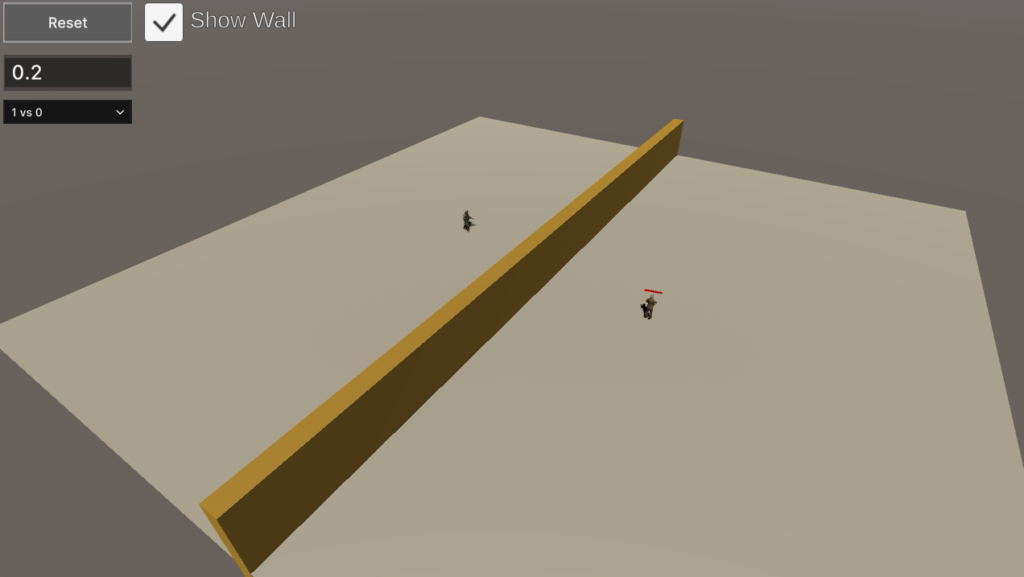

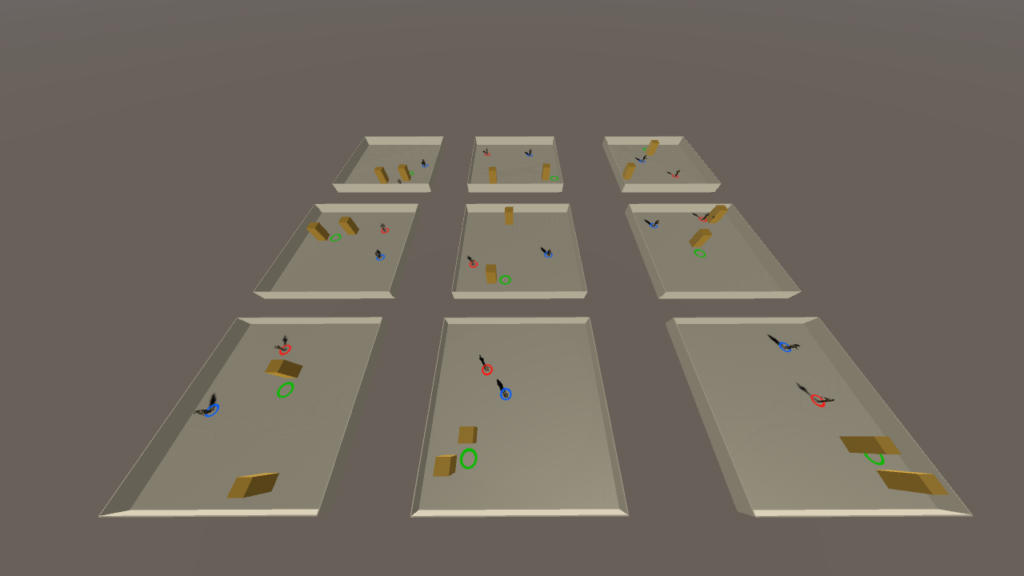

Demonstrates a side-by-side performance analysis of two approaches to agent cover behavior: scripted and machine learning (ML).

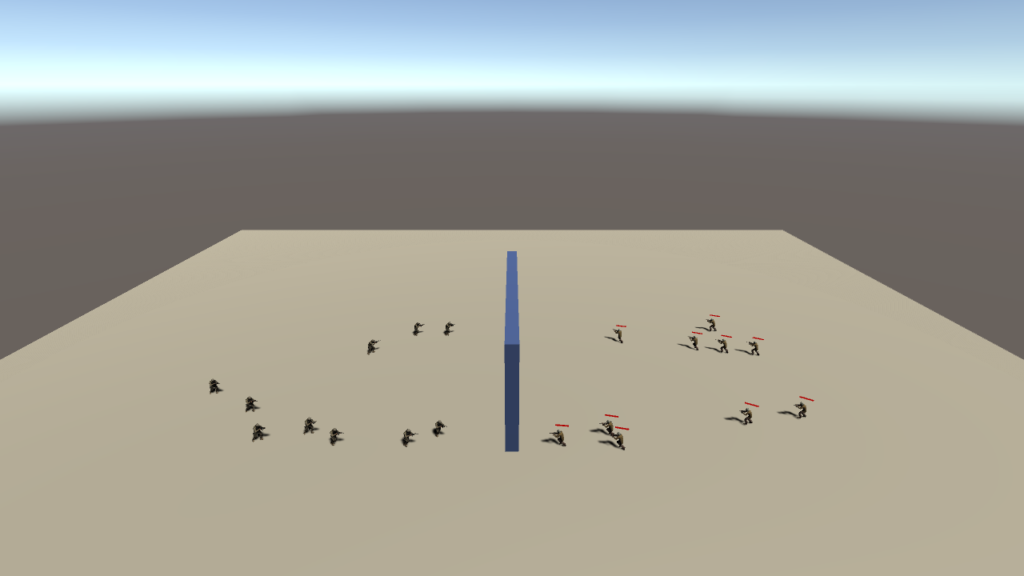

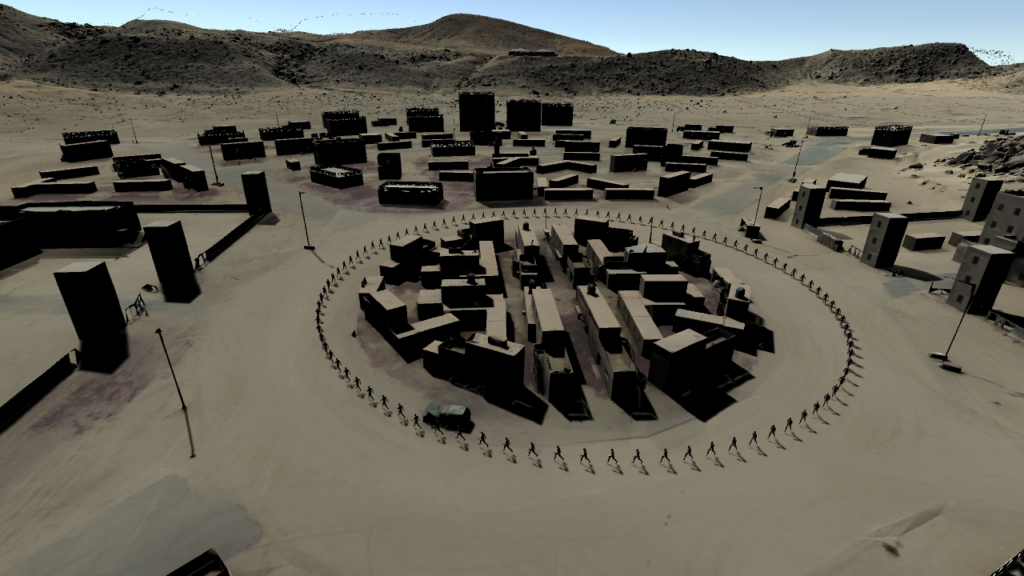

Define the spawn parameters for opposing Blue and Red units. Autonomous behaviors let each side navigate around obstacles and engage enemies within line of sight while advancing to a waypoint.

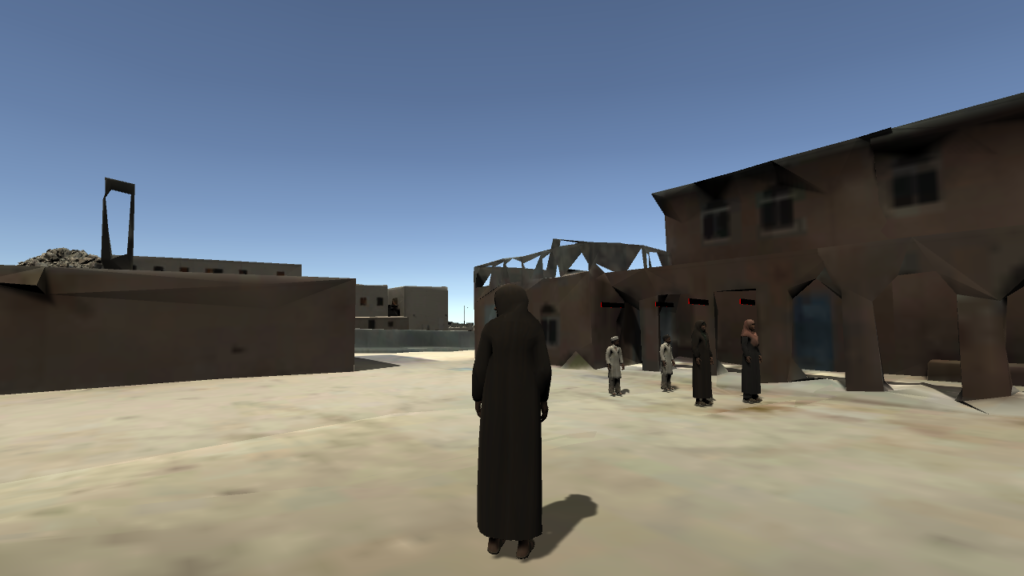

Demonstrates “DataMono” characters built from bootstrappable data components for entities.

Spawn individual agents or squads, then commandeer any single unit with full first-person movement and firing controls.

Organize agents into groups and define relationships between those groups.

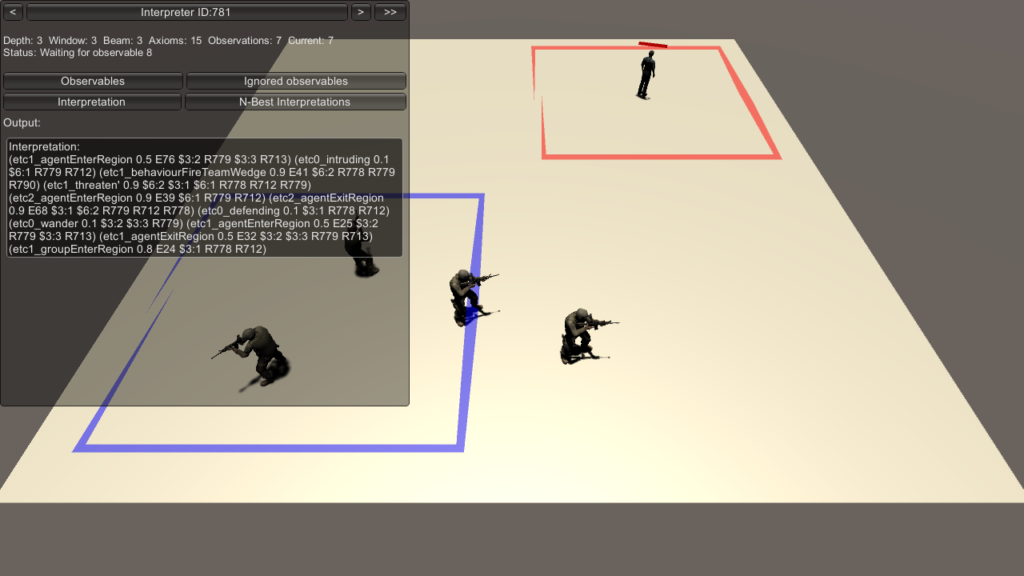
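One way to model the groups and inter-group relationships described above is a membership map plus a symmetric relationship table. This is a minimal sketch under that assumption; `Relationship`, `GroupRegistry`, and the default of `NEUTRAL` for unrelated groups are hypothetical choices, not RIDE's actual types or defaults.

```python
from enum import Enum


class Relationship(Enum):
    FRIENDLY = "friendly"
    NEUTRAL = "neutral"
    HOSTILE = "hostile"


class GroupRegistry:
    """Hypothetical registry: agents belong to named groups, and groups relate to each other."""

    def __init__(self):
        self.members = {}    # group name -> set of agent ids
        self.relations = {}  # frozenset({group_a, group_b}) -> Relationship

    def add_agent(self, group, agent_id):
        self.members.setdefault(group, set()).add(agent_id)

    def group_of(self, agent_id):
        """Return the first group containing agent_id, or None."""
        for group, ids in self.members.items():
            if agent_id in ids:
                return group
        return None

    def set_relationship(self, group_a, group_b, relationship):
        # Keyed by an unordered pair, so A's view of B always equals B's view of A.
        self.relations[frozenset((group_a, group_b))] = relationship

    def relationship(self, group_a, group_b):
        if group_a == group_b:
            return Relationship.FRIENDLY
        # Assumed default: groups with no declared relationship are neutral.
        return self.relations.get(frozenset((group_a, group_b)), Relationship.NEUTRAL)
```

The symmetric key is a simplifying design choice; if one side should be able to regard another as hostile without reciprocation, key the table by an ordered `(group_a, group_b)` tuple instead.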

Demonstrates the systems for real-time interpretation of scenario events and narrative summarization.

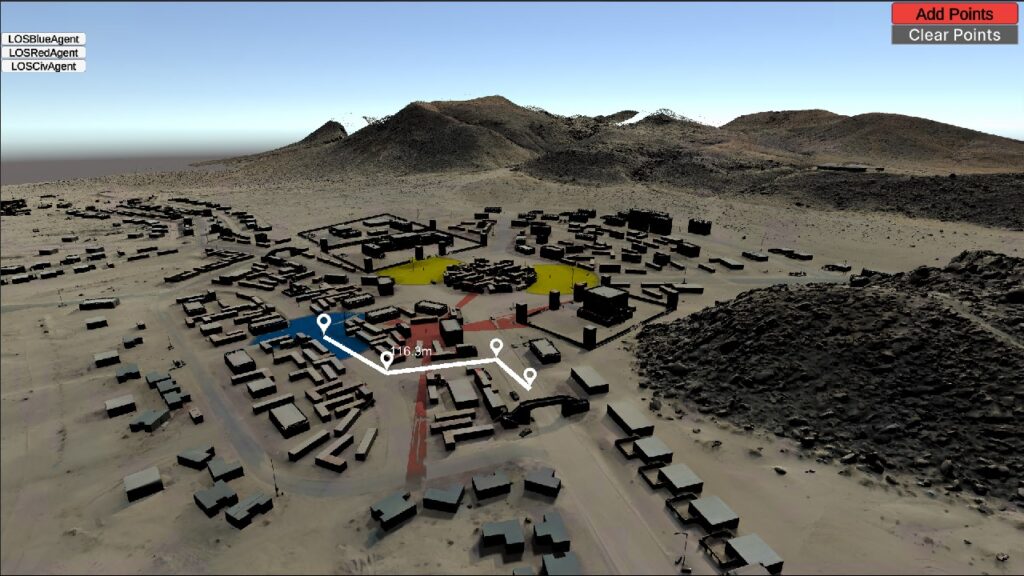

Demonstrates the terrain map line-of-sight (LOS) system for BLUEFOR, OPFOR, and CIV agents.
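A common way to compute terrain line of sight is to walk the grid cells between observer and target and check whether any terrain height rises above the straight sight line. The sketch below assumes a square-grid heightmap and fixed eye/target heights; `has_line_of_sight` and its parameters are hypothetical, and RIDE's LOS system may use raycasts against terrain geometry instead.

```python
def has_line_of_sight(heightmap, start, end, eye_height=1.8, target_height=1.8):
    """Return True if no terrain cell between start and end blocks the sight line.

    heightmap: 2D list of terrain heights indexed [row][col].
    start, end: (row, col) grid cells of the observer and the target.
    """
    (r0, c0), (r1, c1) = start, end
    z0 = heightmap[r0][c0] + eye_height      # observer's eye elevation
    z1 = heightmap[r1][c1] + target_height   # target's elevation
    steps = max(abs(r1 - r0), abs(c1 - c0))
    # Sample each intermediate cell along the segment (endpoints excluded).
    for i in range(1, steps):
        t = i / steps
        r = round(r0 + (r1 - r0) * t)
        c = round(c0 + (c1 - c0) * t)
        sight_z = z0 + (z1 - z0) * t         # sight-line height at this sample
        if heightmap[r][c] > sight_z:
            return False
    return True
```

On a flat 5×5 map with a single 10-unit ridge at cell `(2, 2)`, the check from `(2, 0)` to `(2, 4)` fails while `(0, 0)` to `(0, 4)` succeeds. Per-side visibility (BLUEFOR, OPFOR, CIV) would then be a matter of running this check for each observer and target pair.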

Demonstrates interface systems for moving agents within scenarios.

Demonstrates autonomous agents that use behavior trees (BTs) to decide when to patrol and when to attack.
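To illustrate how a behavior tree can arbitrate between patrolling and attacking, here is a minimal generic sketch built from the standard selector, sequence, condition, and action node types. The node classes, the dictionary-based agent, and the `enemy_visible` flag are hypothetical and do not reflect RIDE's BT implementation.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Selector:
    """Tries children in order; returns the first result that is not FAILURE."""

    def __init__(self, *children):
        self.children = children

    def tick(self, agent):
        for child in self.children:
            status = child.tick(agent)
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


class Sequence:
    """Runs children in order; stops at the first result that is not SUCCESS."""

    def __init__(self, *children):
        self.children = children

    def tick(self, agent):
        for child in self.children:
            status = child.tick(agent)
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Condition:
    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, agent):
        return Status.SUCCESS if self.predicate(agent) else Status.FAILURE


class Action:
    """Placeholder action that records its name and reports RUNNING."""

    def __init__(self, name):
        self.name = name

    def tick(self, agent):
        agent["last_action"] = self.name
        return Status.RUNNING


# Attack if an enemy is visible; otherwise fall back to patrolling.
tree = Selector(
    Sequence(Condition(lambda a: a["enemy_visible"]), Action("attack")),
    Action("patrol"),
)
```

Ticking `tree` with `{"enemy_visible": True}` records `"attack"`, and with `False` it records `"patrol"`. Because the attack branch comes first in the selector, it takes priority whenever its condition holds.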

This example scene shows how to create an interactive tour around a terrain using scenario events and custom AI behaviors.

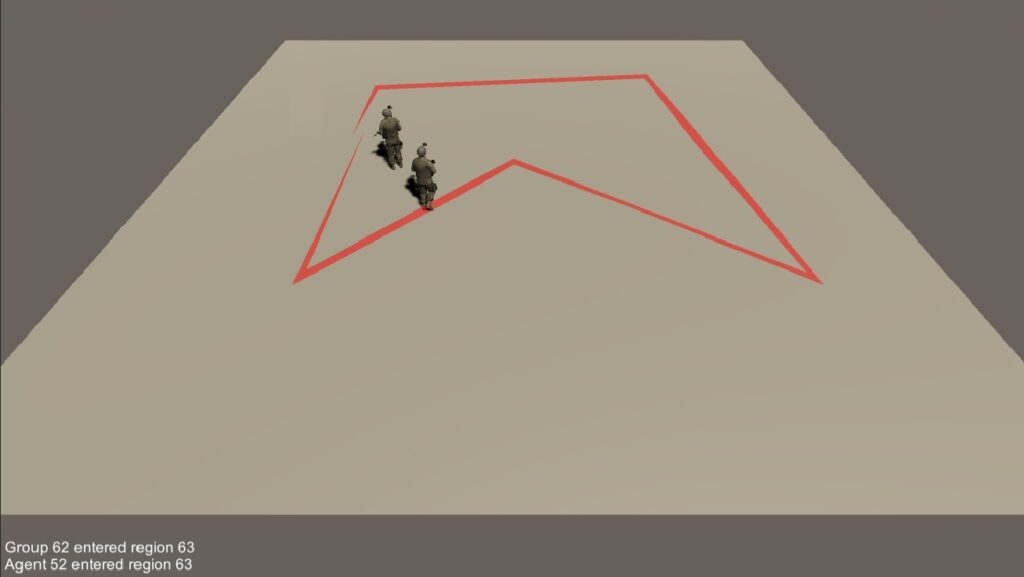

Track individual agents and groups as they enter and exit defined polygonal regions.
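Region tracking of this kind typically pairs a point-in-polygon test with a per-agent record of which regions it currently occupies, so that enter and exit events fall out of a set difference. The sketch below uses the even-odd ray-casting test on 2D ground-plane coordinates; `RegionTracker` and its event tuples are hypothetical, not RIDE's interface.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd ray casting: count how many edges a ray toward +x crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            # x-coordinate where this edge crosses the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


class RegionTracker:
    """Hypothetical tracker that reports agents entering or exiting named regions."""

    def __init__(self, regions):
        self.regions = regions  # region name -> list of (x, y) vertices
        self.occupancy = {}     # agent id -> set of region names currently occupied

    def update(self, agent_id, x, y):
        now = {name for name, poly in self.regions.items()
               if point_in_polygon(x, y, poly)}
        before = self.occupancy.get(agent_id, set())
        self.occupancy[agent_id] = now
        events = [("enter", agent_id, name) for name in sorted(now - before)]
        events += [("exit", agent_id, name) for name in sorted(before - now)]
        return events
```

Calling `update` once per agent per frame yields an event only on transitions. Group tracking could be layered on top, for example by treating a group as inside a region once any (or all) of its members are.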

Competitive networked Red vs. Blue multiplayer on various terrain maps, with dismounted or vehicular units.

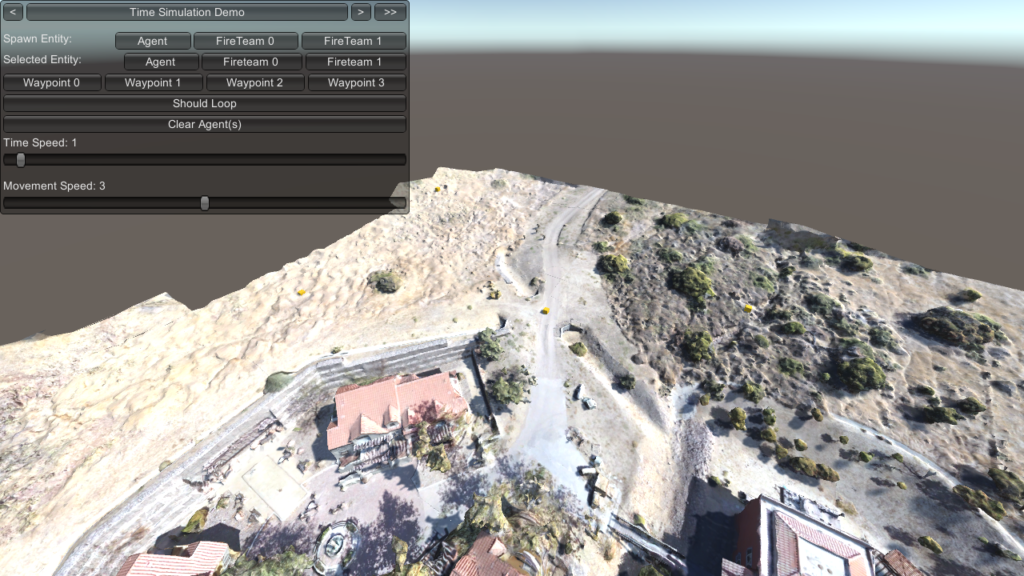

Demonstrates how to control simulation time speed and unit movement speed.
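The two controls mentioned above are independent. A global time scale converts real elapsed time into simulation time, and a per-unit speed multiplier scales how far a unit moves within that simulated time. The sketch below shows the arithmetic; `SimulationClock` and `advance` are hypothetical names, and the time scale plays a role similar in spirit to Unity's `Time.timeScale`.

```python
class SimulationClock:
    """Hypothetical clock that scales real elapsed time into simulation time."""

    def __init__(self, time_scale=1.0):
        self.time_scale = time_scale  # 2.0 runs the simulation twice as fast
        self.sim_time = 0.0

    def tick(self, real_dt):
        sim_dt = real_dt * self.time_scale
        self.sim_time += sim_dt
        return sim_dt


def advance(position, direction, speed, speed_multiplier, sim_dt):
    """Move along a unit direction vector by speed * speed_multiplier * sim_dt."""
    step = speed * speed_multiplier * sim_dt
    return tuple(p + d * step for p, d in zip(position, direction))
```

With a time scale of 2.0, half a real second becomes one simulated second, so a unit with base speed 3.0 and a 0.5 speed multiplier moves from `(0.0, 0.0)` to `(1.5, 0.0)` along the x-axis.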

Rapid training of mlAgent units to model cover behavior through inference. The scene connects over TCP to an external Python application, and the units' “brains” are trained on user-defined observations created in an academy.

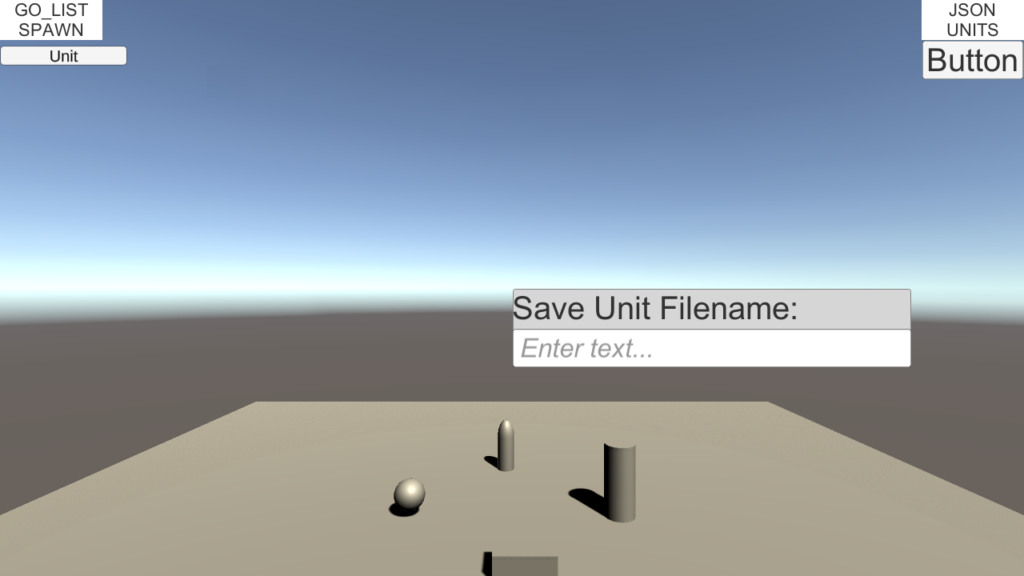

Save agents and groups for later retrieval, then load them back into the scene through custom menus.

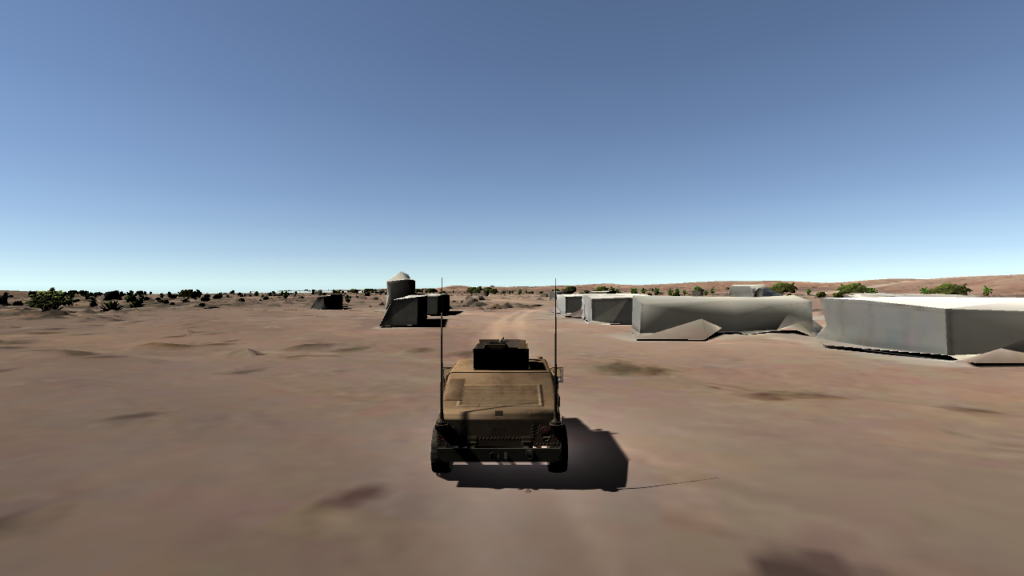
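Saving and restoring agents generally means serializing a snapshot of each agent's state and rebuilding it on load. This minimal sketch round-trips snapshots through JSON text that a save menu could write to disk and a load menu could read back. The `SavedAgent` fields are a hypothetical schema, not RIDE's actual save format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class SavedAgent:
    """Hypothetical snapshot of one agent; the real RIDE save format may differ."""
    name: str
    side: str                    # e.g. "Blue" or "Red"
    position: List[float]        # [x, y, z] world coordinates
    group: Optional[str] = None  # owning group's name, if any


def dump_agents(agents: List[SavedAgent]) -> str:
    """Serialize agent snapshots to a JSON string."""
    return json.dumps([asdict(a) for a in agents], indent=2)


def load_agents(text: str) -> List[SavedAgent]:
    """Rebuild agent snapshots from JSON so they can be respawned."""
    return [SavedAgent(**record) for record in json.loads(text)]
```

Keeping serialization separate from file I/O makes the same functions usable for local files or any other storage a custom menu might target.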

Drive “drivable” vehicles across terrain maps using basic keyboard or gamepad input. Cycle through multiple camera angles in and around the vehicle to observe the environmental variables that affect vehicle physics. An overlay displays vehicle motion statistics in real time.

Configure a scalability test with generic in-game agents in real time, and record performance data locally and in the cloud for later analysis.