Shows how to leverage commodity audio-visual sensing AI services through REST calls, using RIDE’s common web services system.

Leverages RIDE’s generic Sensing C# interface, where a (webcam) image is sent to a server and resulting analysis data is received.

The initially supported service is Microsoft Azure Face, which provides:

Note: the Characteristics and Emotion values were recently deprecated.

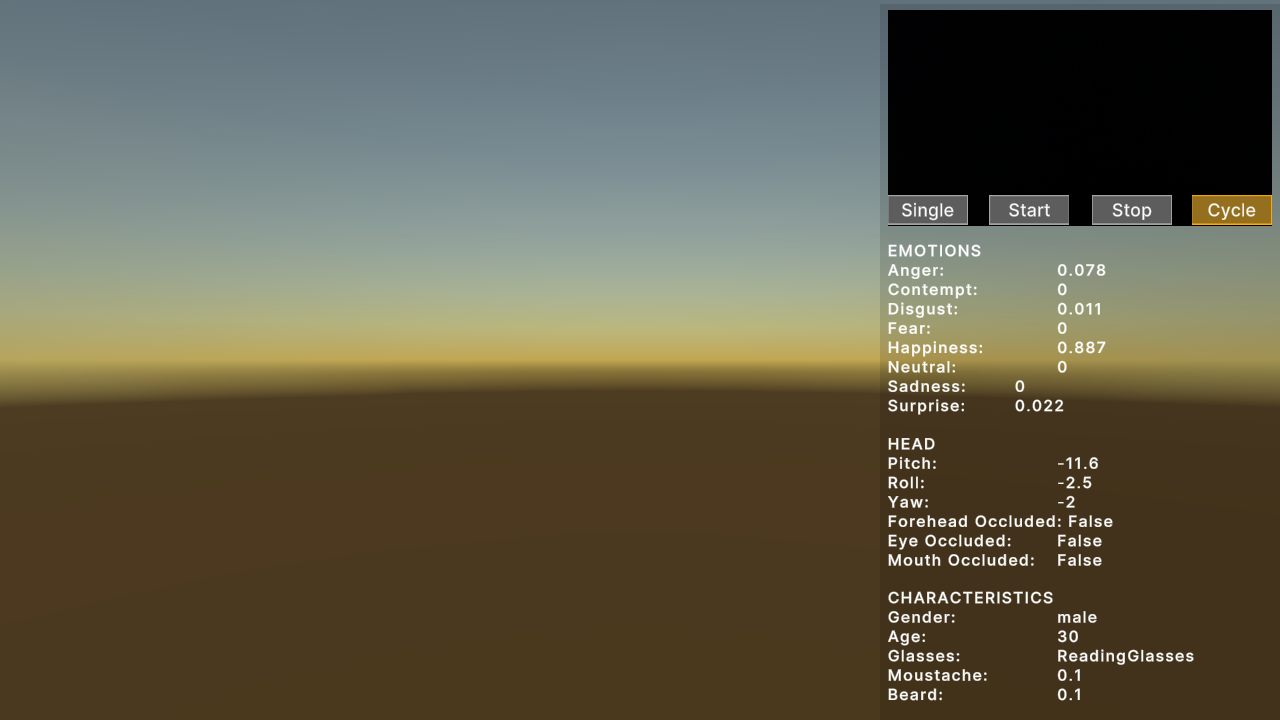

Make sure you have a webcam set up, then run the scene. Your webcam view should appear at the top right. Click Cycle to switch between webcams if you have more than one.

The Single button sends a single webcam image to the server. Results are shown underneath.

The Start button begins sending an image to the server every second. The Stop button ends this process.

\Assets\Ride\Examples\Services\Sensing\ExampleFace.unity

The main script is ExampleFace.cs in the same folder.

Any Sensing option implements the ISensingSystem C# Interface. These systems provide the main building blocks to interface with a sensing service:

```csharp
ISensingSystem m_azureFace;
```

The specific endpoint and authentication information is defined through RIDE’s configuration system:

```csharp
RideConfigSystem m_configSystem = Globals.api.systemAccessSystem.GetSystem<RideConfigSystem>();

string requestParameters = "returnFaceId=true&returnFaceLandmarks=false&returnFaceAttributes=age,gender,headPose,smile,facialHair,glasses,emotion,hair,makeup,occlusion,accessories,blur,exposure,noise";
m_uriAzureFace = m_configSystem.config.azureFace.endpoint + "/face/v1.0/detect?" + requestParameters;

var azureFaceComponent = new GameObject("AzureFaceSystem").AddComponent<AzureFaceSystem>();
azureFaceComponent.m_uri = m_uriAzureFace;
azureFaceComponent.m_endpointKey = m_configSystem.config.azureFace.endpointKey;
m_azureFace = azureFaceComponent;
```
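If you want to request only a subset of face attributes, the query string can be assembled from a list rather than hard-coded. The following is a minimal sketch in plain C# with no Unity or RIDE dependencies. `FaceUriSketch`, `BuildDetectUri`, and the `example.invalid` endpoint are hypothetical names used for illustration only and are not part of RIDE.

```csharp
using System;
using System.Collections.Generic;

public static class FaceUriSketch
{
    // Hypothetical helper (not a RIDE API): builds a detect URI from an
    // endpoint and a list of requested face attributes.
    public static string BuildDetectUri(string endpoint, IEnumerable<string> attributes)
    {
        // Same fixed parameters as the example above; only the attribute list varies.
        string query = "returnFaceId=true&returnFaceLandmarks=false&returnFaceAttributes="
            + string.Join(",", attributes);

        // Trim a trailing slash so the path is not joined with "//".
        return endpoint.TrimEnd('/') + "/face/v1.0/detect?" + query;
    }

    public static void Main()
    {
        // Placeholder endpoint; the real value comes from RIDE's configuration system.
        string uri = BuildDetectUri("https://example.invalid/", new[] { "headPose", "glasses", "blur" });
        Console.WriteLine(uri);
    }
}
```

The resulting string could then be assigned to `m_uri` in place of the hard-coded URI, assuming the service accepts the chosen attribute set.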

The Sensing system encapsulates the input in a SensingRequest object, which is sent through the RIDE common web services system. The results are encapsulated in a SensingResponse object. A callback function supplied by the client (in this case ExampleFace) determines what to do with the result. The Sensing system itself handles most of the work, so the client needs only one line to send the input:

```csharp
Globals.api.systemAccessSystem.GetSystem<ISensingEmotionSystem>(m_azureFace.id).AnalyzeEmotions(m_imageData, OnCompleteEmotion);
```
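The request and callback flow described above can be sketched in isolation. This is a plain C# illustration that runs without Unity or RIDE. `SketchResponse`, `ISketchSensingSystem`, and `FakeFaceSystem` are hypothetical stand-ins, and RIDE's actual SensingRequest and SensingResponse types may look different. The fake system answers immediately instead of making a REST call.

```csharp
using System;

// Hypothetical stand-in for a response object; not RIDE's SensingResponse.
public class SketchResponse
{
    public bool Success;
    public string Json;
}

// Hypothetical stand-in for a sensing interface: image bytes in, callback out.
public interface ISketchSensingSystem
{
    void Analyze(byte[] imageData, Action<SketchResponse> onComplete);
}

// Fake service that invokes the callback right away with canned data.
public class FakeFaceSystem : ISketchSensingSystem
{
    public void Analyze(byte[] imageData, Action<SketchResponse> onComplete)
    {
        bool hasData = imageData != null && imageData.Length > 0;
        onComplete(new SketchResponse
        {
            Success = hasData,
            Json = hasData ? "[{\"faceId\":\"sketch\"}]" : "[]"
        });
    }
}

public static class CallbackSketch
{
    public static void Main()
    {
        ISketchSensingSystem system = new FakeFaceSystem();

        // The client supplies only the image data and a callback.
        system.Analyze(new byte[] { 1, 2, 3 }, response =>
            Console.WriteLine(response.Success ? response.Json : "request failed"));
    }
}
```

Because the client depends only on the interface, a different sensing service could be swapped in without changing the calling code, which appears to be the intent behind the ISensingSystem design.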

Referenced by ExampleGroups script for group definitions.