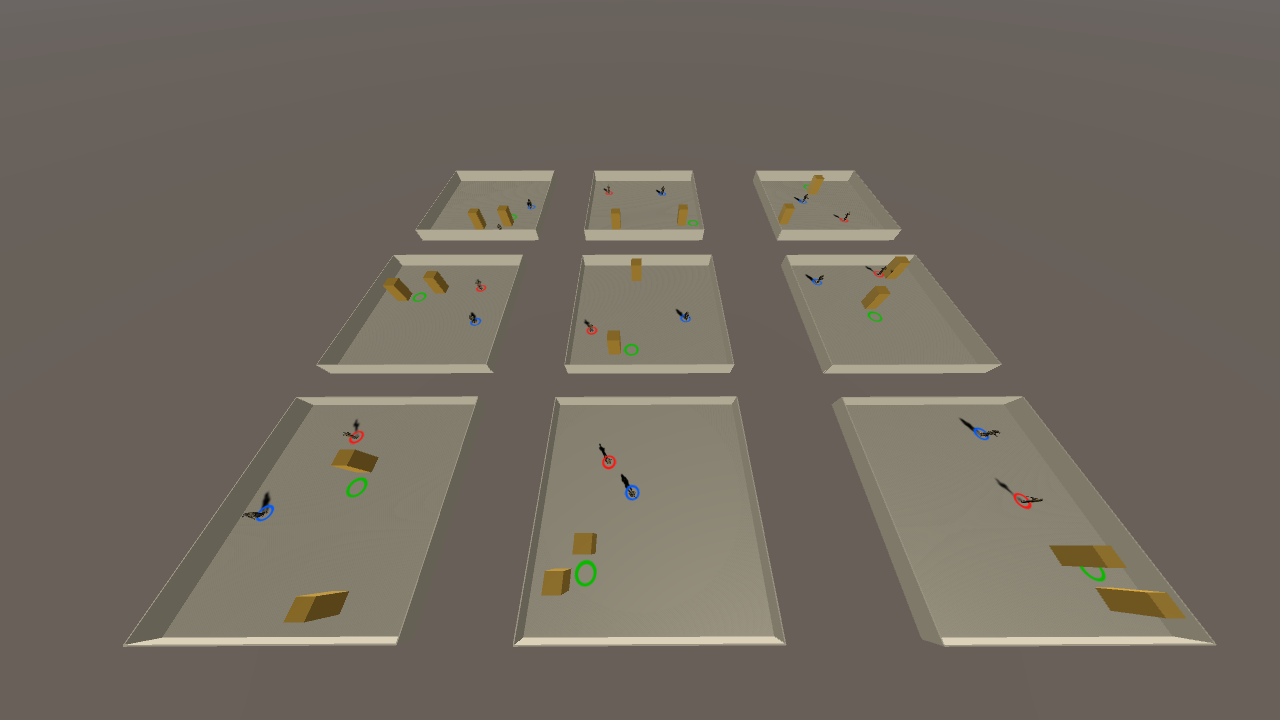

Rapid training of ML-Agents units to model cover behaviour by inference. This scene connects over TCP to an external Python application, which trains the units' “brains” on user-defined observations collected in an academy.

Set up the Python environment by following the steps at:

https://github.com/Unity-Technologies/ml-agents/blob/release_12_docs/docs/Installation.md
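Before installing, a quick check can confirm that the interpreter meets a minimum version and whether the `mlagents` Python package is already importable. This is a minimal sketch: the 3.6.1 minimum is an assumption, so confirm the exact supported Python range on the linked installation page. The function names are hypothetical.

```python
import importlib.util
import sys

# Assumed minimum Python version; confirm against the linked Installation page.
MIN_PYTHON = (3, 6, 1)


def python_version_ok(version=None, minimum=MIN_PYTHON):
    """Return True if the given (or the running) interpreter version meets the minimum."""
    if version is None:
        version = tuple(sys.version_info[:3])
    return tuple(version) >= tuple(minimum)


def mlagents_installed():
    """Return True if the `mlagents` Python package can be found on the import path."""
    return importlib.util.find_spec("mlagents") is not None


if __name__ == "__main__":
    print("Python version OK:", python_version_ok())
    print("mlagents installed:", mlagents_installed())
```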

Approximate Procedure:

To use this example, you first need the following Unity ML-Agents Toolkit core components: ml-agents-0.15.1, ml-agents-env, and ml-agents-release_12. Next, add the ML Agents v1.0.7 package, which is available through the Unity Package Manager.
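One way to confirm the installed package version is to read the project's `Packages/manifest.json`, where the Unity Package Manager lists packages under `"dependencies"`. The sketch below assumes the package name `com.unity.ml-agents` and the 1.0.7 version named above; the helper names are hypothetical.

```python
import json
from pathlib import Path

# Assumed Unity package name for ML-Agents and the version this example names.
PACKAGE = "com.unity.ml-agents"
EXPECTED_VERSION = "1.0.7"


def mlagents_package_version(manifest_text):
    """Return the ML-Agents version listed in a manifest.json string, or None if absent."""
    manifest = json.loads(manifest_text)
    return manifest.get("dependencies", {}).get(PACKAGE)


def check_project(project_root):
    """Read <project_root>/Packages/manifest.json and compare against EXPECTED_VERSION.

    Returns (matches, found_version).
    """
    manifest_path = Path(project_root) / "Packages" / "manifest.json"
    version = mlagents_package_version(manifest_path.read_text(encoding="utf-8"))
    return version == EXPECTED_VERSION, version
```

For example, `check_project("path/to/UnityProject")` returns `(True, "1.0.7")` when the expected version is listed.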

Make sure RIDE_ML_AGENTS is defined in the project's Scripting Define Symbols (Project Settings > Player > Other Settings).
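Scripting define symbols are stored per platform in `ProjectSettings/ProjectSettings.asset`, so the define can be checked without opening the Editor. This is a minimal sketch that assumes the block layout shown in the docstring (older Unity versions key each entry by a platform number such as `1`; newer versions may use platform names instead). The function names are hypothetical.

```python
from pathlib import Path


def parse_define_symbols(asset_text):
    """Map each platform key under `scriptingDefineSymbols:` to its set of symbols.

    Assumes the nested block layout Unity writes, for example:
        scriptingDefineSymbols:
          1: RIDE_ML_AGENTS;OTHER_SYMBOL
    """
    symbols = {}
    lines = asset_text.splitlines()
    for i, line in enumerate(lines):
        if line.strip().startswith("scriptingDefineSymbols:"):
            base_indent = len(line) - len(line.lstrip())
            for entry in lines[i + 1:]:
                indent = len(entry) - len(entry.lstrip())
                if not entry.strip() or indent <= base_indent:
                    break  # left the nested block
                key, _, value = entry.strip().partition(":")
                symbols[key.strip()] = {s.strip() for s in value.split(";") if s.strip()}
            break
    return symbols


def has_symbol(asset_text, symbol="RIDE_ML_AGENTS"):
    """Return True if any platform entry defines `symbol`."""
    return any(symbol in defined for defined in parse_define_symbols(asset_text).values())


def project_has_symbol(project_root, symbol="RIDE_ML_AGENTS"):
    """Check <project_root>/ProjectSettings/ProjectSettings.asset for `symbol`."""
    path = Path(project_root) / "ProjectSettings" / "ProjectSettings.asset"
    return has_symbol(path.read_text(encoding="utf-8"), symbol)
```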

Once this is done, open a command prompt in:

/ml-agents/ml-agents-env

Enter the commands:

To start the training program, enter the following command:

Then press Play in the ExampleTrainingTakeCover scene in Unity, and the agents will begin to train.

To monitor how effective the training is, open a new command prompt in /ml-agents/ml-agents-env and enter the following:

The example scene is located at: Assets/Ride/Examples/Behaviours/MLBehaviours/ExampleTrainingTakeCoverML.unity

To use ML-Agents in your own scene, combine the ExampleTrainingTakeCoverML script with the TrainingArea_TakeCoverML prefab.

First, add the ExampleTrainingTakeCoverML script to an object in your scene.

Next, add the TrainingArea_TakeCoverML prefab to your scene to train agents for cover behaviour with the external Python application.

This prefab contains the TrainingArea_TakeCover script, floor/walls, cover, goals, Agent (script), Enemy, and mlAgent objects.

Create a prefab variant of TrainingArea_TakeCover to begin constructing your own training environments.

We recommend building a comparison scene to evaluate two approaches to your problem. See Behaviour Comparison for an example of how to structure a side-by-side comparison of agent behaviour.
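A comparison scene is easier to judge with a simple numeric summary alongside the visual one. The sketch below assumes you record one reward (or other success score) per episode for each approach, for example scripted cover logic versus the trained mlAgent; the approach names, the metric, and the function names are hypothetical.

```python
from statistics import mean, stdev


def summarize(episode_rewards):
    """Return (episodes, mean, sample std) for a non-empty list of per-episode rewards."""
    n = len(episode_rewards)
    spread = stdev(episode_rewards) if n > 1 else 0.0
    return n, mean(episode_rewards), spread


def compare(name_a, rewards_a, name_b, rewards_b):
    """Print a side-by-side summary and return the name with the higher mean reward.

    Ties go to `name_a`.
    """
    for name, rewards in ((name_a, rewards_a), (name_b, rewards_b)):
        n, avg, spread = summarize(rewards)
        print(f"{name:<12} episodes={n:<4} mean={avg:7.3f} std={spread:7.3f}")
    return name_a if mean(rewards_a) >= mean(rewards_b) else name_b
```

A mean alone can hide unstable behaviour, which is why the standard deviation is printed next to it; collect the same number of episodes for both approaches so the summaries are comparable.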